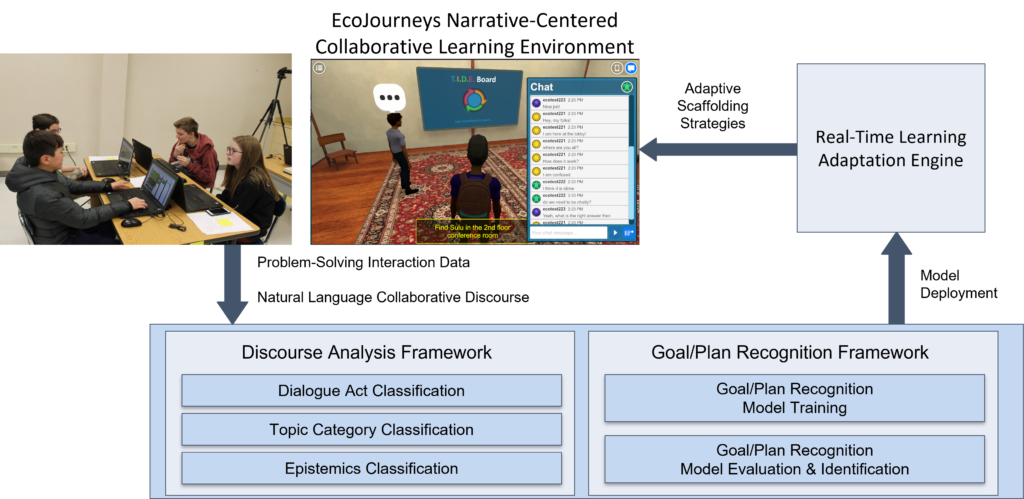

The EngageAI Institute team is pursuing three complementary efforts that investigate deep learning techniques for analyzing multi-party dialogue between students during collaborative inquiry in AI-augmented learning environments:

- A joint team from NC State University and Indiana University investigated deep learning-based multi-party dialogue act recognition using text chat data from students' collaborative learning interactions in Crystal Island: EcoJourneys, a narrative-centered learning environment. The chat data was annotated with a coding scheme developed by Hmelo-Silver and Barrows (2008) that enumerates question and response types related to student and group regulation, offering and receiving knowledge, and task-oriented dialogue. The annotations fall into two broad categories: topic-based and epistemic-based labels. Using this dataset, the team examined the efficacy of transfer learning with pre-trained large language models (LLMs) for recognizing the dialogue acts of student and facilitator chat messages. The LLM-based methods outperformed the baseline methods, and the T5 model, using context windows of 3 and 5, showed the largest increase in performance (4% for topic labels and 3% for epistemic labels), reaching 72% and 62% accuracy, respectively.

- Working in coordination with UNC Senior Personnel Snigdha Chaturvedi, NC State research software engineer and computer science doctoral student Vikram Kumaran used the Crystal Island: EcoJourneys text chat dataset to investigate classroom dialogue act recognition from limited labeled data with SSCon, a self-supervised approach based on contrastive learning. This work focused on classifying the ‘topic’ labels assigned to student utterances. The dialogue act recognition models were tested in two few-shot settings: one with 10 examples per class and another with 70 examples per class. An XGBoost classifier trained on the same limited data served as the baseline. In multi-class classification accuracy, SSCon outperformed the baseline by at least 7% with 70 examples per class and by almost 40% with just 10 examples per class.

- NC State research scientist Yeojin Kim investigated multi-party dialogue act recognition with pre-trained language models (T5, BERT, SBERT) and deep learning models (LSTMs, autoencoders) using TalkMoves, a public dataset of text-based conversations between teachers and students in K-12 math classes. The main experimental finding was that specifying the speaker's role did more to improve dialogue act recognition performance than including conversational history.
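The context window in the first study and the speaker-role finding in the third both concern what goes into the model's input. The following is a minimal sketch, assuming that a "context window of k" means the k utterances preceding the target message; the studies' actual input format is not specified here, so the `Utterance` class, the `build_input` function, the `context:`/`target:` markers, the `[role]` tags, and the sample chat are all hypothetical. Toggling `include_role` and `window` illustrates the two factors being compared.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    speaker_role: str  # hypothetical role labels, e.g. "student" or "facilitator"
    text: str


def build_input(dialogue, index, window=3, include_role=True):
    """Format dialogue[index] with up to `window` preceding utterances as context.

    The "context:"/"target:" markup and the [role] tags are illustrative
    assumptions, not the format used in the reported experiments.
    """

    def fmt(u):
        # Prefix the speaker's role only when role information is enabled.
        return f"[{u.speaker_role}] {u.text}" if include_role else u.text

    start = max(0, index - window)
    history = " | ".join(fmt(u) for u in dialogue[start:index])
    target = fmt(dialogue[index])
    return f"context: {history} target: {target}" if history else f"target: {target}"


# Hypothetical chat excerpt, for illustration only.
chat = [
    Utterance("student", "What is making the fish sick?"),
    Utterance("student", "Maybe the water temperature?"),
    Utterance("facilitator", "What evidence would support that?"),
    Utterance("student", "We could check the thermometer readings."),
]

# Role tags on, two utterances of history.
print(build_input(chat, 3, window=2))
# Role tags off, three utterances of history.
print(build_input(chat, 3, window=3, include_role=False))
```

In one plausible setup, a text-to-text model such as T5 would read the returned string and generate a dialogue act label, while an encoder such as BERT would classify it directly; this is an assumption, not a description of the teams' pipelines.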
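SSCon's specific training objective is not detailed in this summary. As a hedged illustration of the general technique only, the sketch below implements the standard InfoNCE contrastive loss, which pulls each anchor embedding toward its paired positive and pushes it away from the other examples in the batch; it is not claimed to be SSCon's loss. In a self-supervised setup, an anchor and its positive would typically be two augmented views of the same unlabeled utterance. The function names, the two-dimensional toy vectors, and the `temperature` default are assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def info_nce(anchors, positives, temperature=0.1):
    """Mean InfoNCE loss: anchors[i] should match positives[i].

    For each anchor, the other positives in the batch act as negatives.
    """
    total = 0.0
    for i, a in enumerate(anchors):
        logits = [cosine(a, p) / temperature for p in positives]
        # Numerically stable log-sum-exp over the similarity logits.
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        # Cross-entropy with the matching positive as the correct "class".
        total += log_sum_exp - logits[i]
    return total / len(anchors)


# Toy embeddings, for illustration only.
anchors = [[1.0, 0.0], [0.0, 1.0]]
positives = [[1.0, 0.1], [0.1, 1.0]]

print(info_nce(anchors, positives))        # small: each anchor is closest to its own positive
print(info_nce(anchors, positives[::-1]))  # large: the pairs are mismatched
```

One common way to use such a loss for few-shot classification is to pre-train an encoder on unlabeled messages and then fit a small classifier on the few labeled examples per class; whether SSCon follows exactly this pattern is not stated here.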

Upcoming Plans: The project team will continue to refine deep learning-based methods for multi-party dialogue act recognition, drawing on recent and emerging developments in few-shot and zero-shot learning to improve model performance. In addition, the team plans to investigate integrating dialogue act recognition models into the run-time Crystal Island: EcoJourneys narrative-centered learning environment to drive adaptive scaffolding that supports students’ collaborative learning processes.