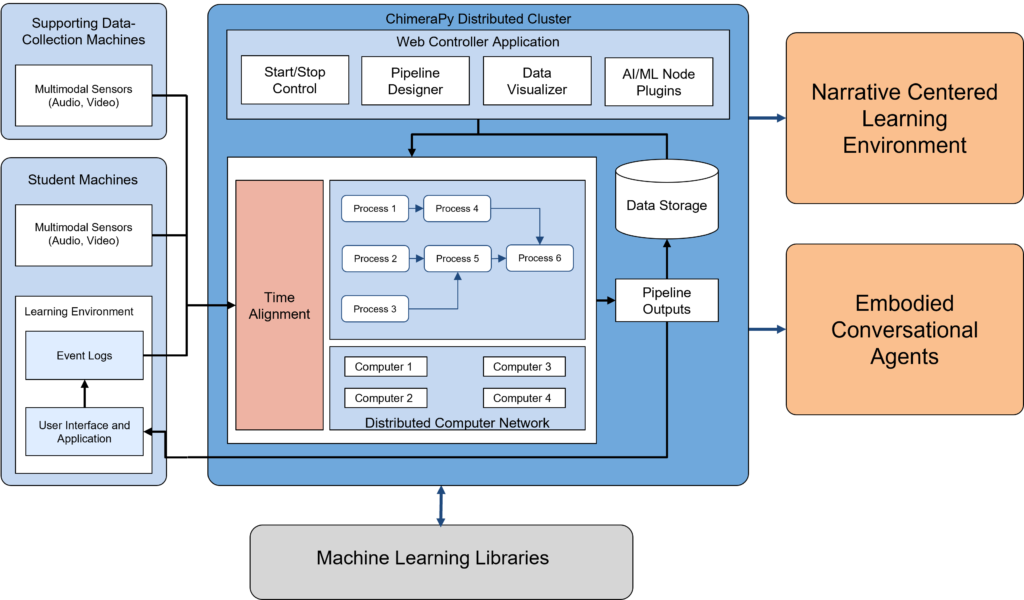

The Vanderbilt and NC State teams have worked together to create a prototype multimodal learning analytics pipeline for use with the Institute’s AI-driven narrative-centered learning environments. The pipeline includes an implementation of ChimeraPy, a modular, component-based, distributed data collection networking architecture developed at Vanderbilt. ChimeraPy supports the collection, alignment, and archiving of data from multiple sensing modalities, and it provides a flexible multi-processor computational architecture that supports compute-intensive machine learning algorithms for multimodal data analysis. While still in its early stages, the implementation supports high-throughput communication in a distributed environment, where data streams from sensors (i.e., different modalities) can be collected, aligned, and archived centrally. The team’s design and development efforts on the pipeline have focused on three distinct use cases: 1) individual, 2) small-group, and 3) classroom-scale deployments across different educational contexts.
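To give a rough sense of what aligning streams from different modalities involves, the sketch below pairs each sample of one timestamped stream with the nearest sample of another, within a tolerance. It is a generic illustration in plain Python and does not use or reflect ChimeraPy's actual API. The function name `align_nearest`, the `(timestamp, value)` tuple layout, and the sample data are all assumptions made for this example.

```python
from bisect import bisect_left


def align_nearest(reference, other, tolerance):
    """Pair each reference sample with the nearest sample in `other`.

    Both streams are lists of (timestamp_seconds, value) tuples sorted by
    timestamp (an assumed layout for this sketch). If no sample in `other`
    lies within `tolerance` seconds, the paired value is None.
    """
    other_times = [t for t, _ in other]
    aligned = []
    for t, value in reference:
        i = bisect_left(other_times, t)
        # The nearest sample is either just before or just after position i.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other)]
        best = min(candidates, key=lambda j: abs(other_times[j] - t), default=None)
        if best is not None and abs(other_times[best] - t) <= tolerance:
            aligned.append((t, value, other[best][1]))
        else:
            aligned.append((t, value, None))
    return aligned


# Hypothetical data: video frames and eye-gaze samples with drifting timestamps.
frames = [(0.0, "frame0"), (0.5, "frame1"), (1.0, "frame2")]
gaze = [(0.02, "gaze0"), (0.48, "gaze1"), (1.4, "gaze2")]

aligned = align_nearest(frames, gaze, tolerance=0.1)
for row in aligned:
    print(row)
```

Nearest-timestamp matching is only one possible alignment strategy. A distributed deployment also has to account for clock differences between machines, which this sketch ignores.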

Upcoming Plans: There are several next steps for this work:

- The team is exploring the design and development of a web-based dashboard to control multimodal pipeline orchestration as well as to provide a data preview for calibrating hardware sensors.

- The team will continue to prototype and refine integration channels with the Institute’s AI-driven narrative-centered learning environments. Currently, we are running experiments with data collected from a C2STEM study as well as an embodied learning study that was conducted at Vanderbilt with the GEM-STEP system in Fall 2022.

- The team plans to begin developing a repository of deep learning-powered multimodal learning analytics pipelines in ChimeraPy.