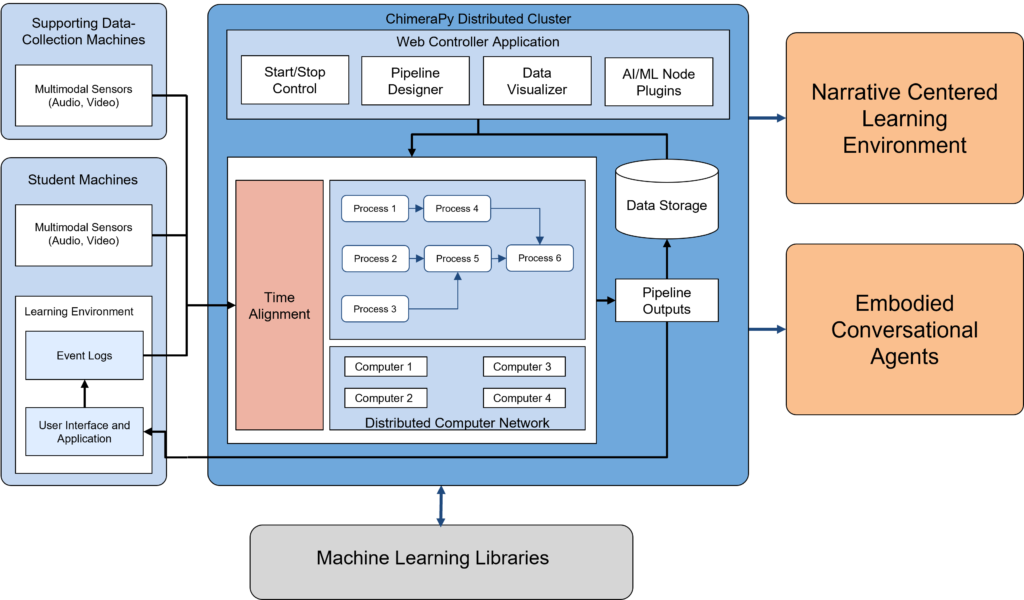

Multimodal learning analytics deepens our grasp of the learning process by adding context to the behaviors and thoughts of students and teachers. Modalities such as body language, emotion, eye movement, and speech carry complex signals of social communication and expression that are important to understanding how learning processes evolve. Traditional systems that rely solely on activity logs fall short in capturing these dynamics, particularly in group settings where many social signals are exchanged at once. The EngageAI team has developed methods to capture these diverse data streams and align them with learning environment logs on a unified timeline for comprehensive analysis.

The EngageAI multimodal learning analytics platform is built on LiveKit client and server libraries, which makes it scalable, cloud-deployable, and secured with standard cybersecurity and encryption protocols. It integrates the essential cloud infrastructure components (authentication, storage, services, and databases) into a single system that lets learning environments use multimodal data from client devices such as browsers and IoT devices. Designed to support various EngageAI projects through a single web gateway, the platform enables the creation of an individual project for each learning environment, complete with an admin/researcher dashboard for session and project management. As learning environments increasingly adopt web-based deployments for their scalability and impact, the team has developed client SDKs for JavaScript and Web-Unity to ease integration with the platform.

A pilot study using the multimodal learning analytics platform was conducted in early April 2024 with a classroom of students working in teams with the EcoJourneys collaborative narrative-centered learning environment. The captured data included video and audio streams from the students’ laptop webcams, alongside system logs. Additional recordings from tabletop microphones and classroom-wide cameras documented broader social interactions. All collected data was synchronized into a single timeline for detailed analysis. Throughout the study, the system managed over 75 data streams and securely stored them in an S3 bucket. The integration architecture used Dockerized containers for a LAN-based deployment of LiveKit, LiveKit-MMLA (API/Dashboard), EcoJourneys, and other components.
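To make the idea of a single synchronized timeline concrete, here is a minimal sketch in Python. It is not the EngageAI implementation. It assumes each stream yields timestamped records and that a per-stream clock offset to a shared reference clock is already known. The names `Event`, `merge_timeline`, and the sample stream names are hypothetical and chosen only for illustration.

```python
import heapq
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Event:
    t: float       # seconds on the shared reference clock
    stream: str    # name of the source stream (hypothetical labels)
    payload: str   # stand-in for a log entry, audio segment, or video frame


def merge_timeline(
    streams: Dict[str, List[Tuple[float, str]]],
    offsets: Dict[str, float],
) -> List[Event]:
    """Shift each stream onto the shared clock, then merge by timestamp."""
    per_stream = []
    for name, records in streams.items():
        offset = offsets.get(name, 0.0)  # assumption: offsets are known in advance
        events = [Event(t + offset, name, payload) for t, payload in records]
        per_stream.append(sorted(events, key=lambda e: e.t))
    # heapq.merge combines already-sorted inputs into one sorted sequence.
    return list(heapq.merge(*per_stream, key=lambda e: e.t))


# Illustrative data: the system log clock runs 100 s ahead of the webcam clock.
streams = {
    "webcam_audio": [(0.0, "speech start"), (2.5, "speech end")],
    "system_log": [(100.5, "opened notebook"), (102.0, "sent chat")],
}
timeline = merge_timeline(streams, offsets={"system_log": -100.0})
for event in timeline:
    print(f"{event.t:6.2f}  {event.stream:13s} {event.payload}")
```

In practice the hard part is estimating the offsets, for example from a shared synchronization signal or server-side receive times. The sketch treats them as given and shows only the merge step.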

Upcoming Plans: The EngageAI team is focusing on three complementary objectives: (1) enhancing integration with other EngageAI software, (2) improving IoT device support, and (3) fully incorporating LiveKit Agents functionality. First, the team plans to integrate the multimodal learning analytics platform with other narrative-centered learning environments developed by the Institute, which will broaden multimodal data collection and allow thorough testing of the pipeline before a wider release. Second, although IoT devices can already stream data to the platform, each one must be connected to a session manually. The team aims to reduce this to a one-time setup, after which IoT devices join subsequent sessions automatically. Lastly, the team intends to fully implement LiveKit Agents, a feature that facilitates deploying AI within a session and reduces the complexity of cloud-deploying AI models.