Authors: Tiffany Barnes

Key Ideas

- A new brief provides a framework, reflection maps, data design flows, and model cards to help identify gaps in ethical considerations for AI in education research.

- Researchers and developers should have ethics and justice goals as well as learning goals throughout every phase of their work.

- Researchers have a responsibility to consider beneficence for all community members impacted by their work, particularly when studying AI in education.

Education researchers and developers across the country frequently face the question of how to ensure that the educational technology they study and create is equitable for learners, teachers, and communities. Embedding this in one’s work can be complex, as I know well from my 29 years of research experience in Computer Science and AI.

When the Community for Advancing Discovery Research in Education (CADRE) Committee on AI in STEM Education and Education Research decided to write a paper on this subject, I was very excited to share my thoughts. Ultimately, we released Toward Ethical and Just AI in Education Research, a brief offering guidance to researchers and developers for responsible AI research and implementation in educational settings.

We really wanted to convey that ethics is not something you do after the fact, but a process you carry out at every stage of a research project.

One of the topics that inspired me most while writing this brief was the observation that so much of the focus in developing research-based educational technology is on getting to the end interface and building the underlying algorithms and AI. But there are many steps before that, and they are just as important to consider. Throughout our brief, we encourage researchers and developers to say “let’s make sure we have a justice goal in addition to our learning goal.” Doing so gives them guiding principles throughout every step of the design, implementation, and analysis processes. For example, it might be easier to embed end-user input into the interface by accounting for whether students might respond better to an illustrated chatbot or a character that looks like their teacher. Taking ethics into account at every stage will pay off for both the researchers and the end users of the technology.

Providing an Intro to Ethics

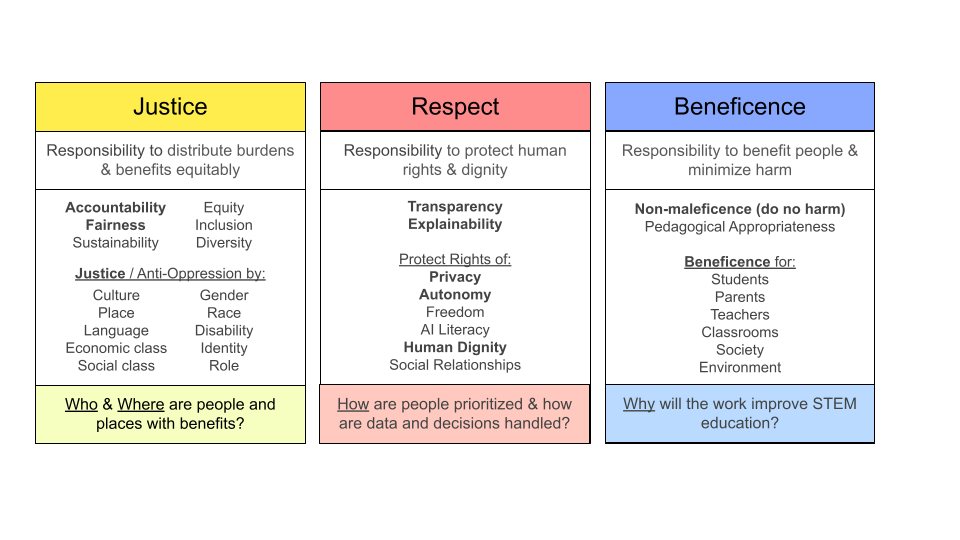

In the initial part of the report, we provide a brief intro to AI ethics principles for researchers, including broad definitions of respecting people (how we impact them as individuals and their communities). The accompanying figure organizes the Ethical AIED framework into overarching principles and guiding questions for AI ethics in education, in terms that researchers will recognize.

One component of the framework that we sought to emphasize was the focus on justice. Before a researcher decides that something like AI is going to help students, we want them to first consider sustainability (including harm to the oceans and our environment), and then other important elements of society and culture (including potential harm to or exclusion of already-marginalized groups). It’s crucial that researchers respect the rights of individuals (not just students, but their families, teachers, classrooms, and communities) and the social relationships that could be helped or harmed by implementing the technology. Because these issues are multifold, our brief aims to support an ongoing reflective thought process through reflection maps that carry AI ethics questions throughout the design process.

I should note that it will be impossible to do well in every part of the Ethical AIED framework. Ethical AIED is a continuous process, not an outcome. That said, we hope that researchers and developers will center specific and measurable justice and ethics goals in their AI designs for education. We hope that our work, and all that is to come, can be a supportive stepping stone on the way towards ethical and just AI research in education.

Interrogate Systems Through AI and Data Design

If you think of your AI software, such as a personalized learning assistant, as a data application, it becomes easier to think about how systems of oppression and power operate within it and how they might change. An educational AI system takes in data about people, such as their language, behavior, and learning (for example, the correctness of their answers). The designer of that system decides how that data will be transformed into personalized adaptations. When students use slang and vernacular to communicate with an AI chatbot, certain components of an algorithm might classify their language as negative. But just because language may be perceived as negative in a different context doesn’t mean it actually is negative. In that case, the interpretation of the data may be promoting injustice. It’s also important to note that the more consequential the result is for the student, the more important it is to look out for behavioral and linguistic bias, along with other injustices.
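One way to start looking for this kind of linguistic bias is to compare how often a system flags messages as negative across groups of students. The sketch below is only an illustration and is not taken from the brief: the function name `flag_rates`, the group labels, and the sample records are all hypothetical, and a real audit would need far more care about how groups are defined and how much data is available.

```python
from collections import defaultdict


def flag_rates(records):
    """Return the fraction of messages flagged as negative for each group.

    `records` is an iterable of (group, was_flagged) pairs. Both the grouping
    and the flag are assumptions made for this illustration.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


# Made-up records for illustration only; not real student data.
records = [
    ("group_a", True), ("group_a", False), ("group_a", False), ("group_a", False),
    ("group_b", True), ("group_b", True), ("group_b", False), ("group_b", False),
]

rates = flag_rates(records)
# A large gap between groups is a prompt to inspect the flagged messages,
# not proof of bias on its own.
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

A gap like this says nothing about why the rates differ. It only points to where a person should read the underlying messages and ask whether the system is misreading vernacular as negativity.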

Structuring Reflections

The project team didn’t want to leave researchers without advice on next steps, so we included model cards within the brief. “Model cards…help identify gaps between an externally created model and AI needs within a project. As the research, software, data, and models evolve, tools such as these should be revisited throughout the AIED lifecycle to ensure that AIED systems are designed for justice while respecting and benefiting people in alignment with the ethical AIED framework” (Barnes et al., 2024).

These cards provide a consistent set of questions so that if people adopt your AI models, they understand key elements such as what your software was originally intended to model. An example card is provided within the full brief.
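To make the idea of a consistent set of questions concrete, here is a minimal sketch of a model card as a small data structure, with a helper that lists the questions still left unanswered. It does not reproduce the card from the brief: the class `ModelCard`, its field names, and the example values are all hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass
class ModelCard:
    """Hypothetical model card; field names are illustrative, not from the brief."""
    model_name: str = ""
    intended_use: str = ""
    training_population: str = ""
    known_limitations: str = ""
    justice_goal: str = ""


def unanswered_questions(card: ModelCard) -> list[str]:
    """Return the names of fields left blank, i.e. questions still to answer."""
    return [f.name for f in fields(card) if not getattr(card, f.name).strip()]


# Example values are invented for illustration.
card = ModelCard(
    model_name="example-tutor-model",
    intended_use="Hints for introductory programming exercises",
)
print(unanswered_questions(card))
# ['training_population', 'known_limitations', 'justice_goal']
```

Keeping the card in a structured form like this makes it easy to revisit as the research, data, and models evolve, since blank or outdated answers stand out.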

Through CADRE and the EngageAI Institute, I hope to support continuous progress in education research. Contact cadre@edc.org or visit cadrek12.org for more information about the brief.

Resources

Barnes, T., Danish, J., Finkelstein, S., Molvig, O., Burriss, S., Humburg, M., Reichert, H., & Limke, A. (2024). Toward Ethical and Just AI in Education Research. Community for Advancing Discovery Research in Education (CADRE). Education Development Center, Inc. Available online at: https://cadrek12.org/resources/toward-ethical-and-just-ai-education-research